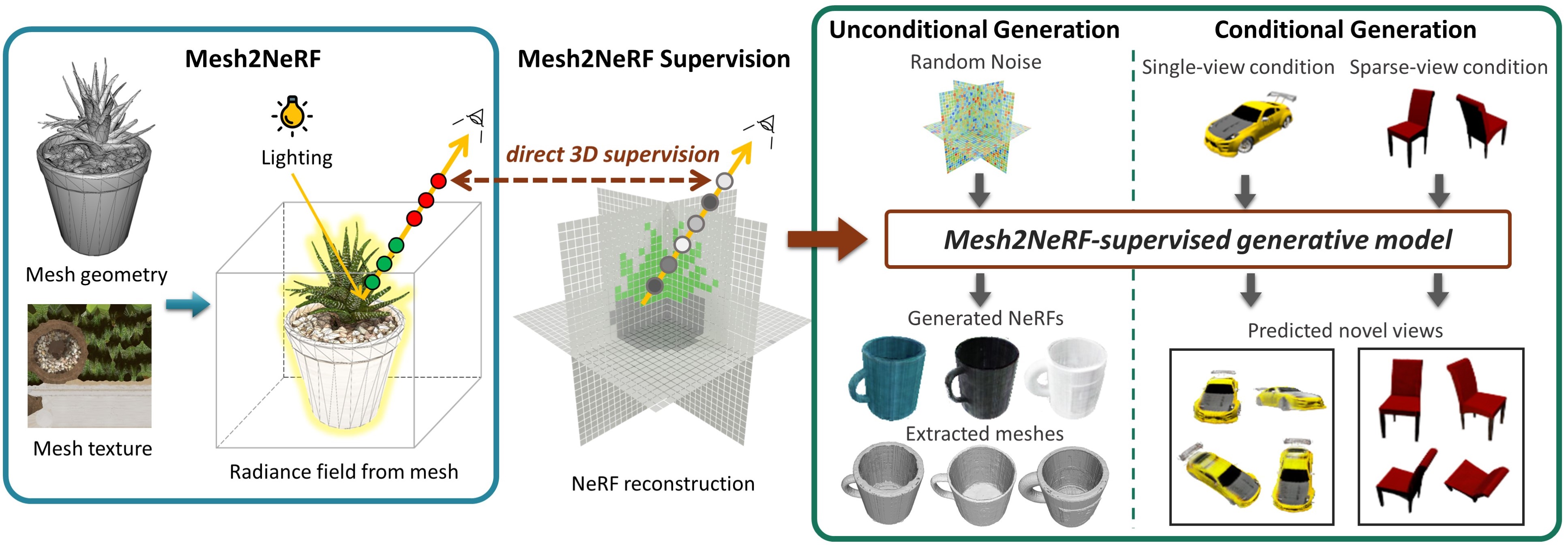

We present Mesh2NeRF, an approach to derive ground-truth radiance fields from textured meshes for 3D generation tasks. Many 3D generative approaches represent 3D scenes as radiance fields for training. Their ground-truth radiance fields are usually fitted to multi-view renderings from a large-scale synthetic 3D dataset, which often introduces artifacts due to occlusions or under-fitting. In Mesh2NeRF, we propose an analytic solution that obtains ground-truth radiance fields directly from 3D meshes: the density field is characterized by an occupancy function with a defined surface thickness, and view-dependent color is determined by a reflection function that considers both the mesh and the environment lighting. Mesh2NeRF extracts accurate radiance fields that provide direct supervision for training generative NeRFs and for single-scene representation. We validate the effectiveness of Mesh2NeRF across various tasks, achieving a 3.12 dB improvement in PSNR for view synthesis in single-scene representation on the ABO dataset, a 0.69 dB PSNR improvement in single-view conditional generation on ShapeNet Cars, and notably improved mesh extraction from NeRF in unconditional generation on Objaverse Mugs.
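To make the occupancy-based density concrete, here is a minimal sketch, not the authors' implementation. It assumes a unit sphere as a stand-in for the mesh surface, a hypothetical `half_thickness` parameter for the surface band, and a binary alpha (1 inside the band, 0 outside) that is composited with the standard volume-rendering weights. All function names are illustrative.

```python
import math

def unsigned_distance_to_sphere(point, radius=1.0):
    """Distance from a point to the surface of a sphere centered at the origin.
    The sphere is a stand-in (assumption) for distance-to-mesh queries."""
    return abs(math.sqrt(sum(c * c for c in point)) - radius)

def alpha_from_occupancy(distance, half_thickness):
    """Binary alpha: opaque inside the surface band, empty elsewhere (assumption)."""
    return 1.0 if distance < half_thickness else 0.0

def render_weights(alphas):
    """Standard alpha-compositing weights: w_i = T_i * alpha_i,
    where T_i is the transmittance accumulated before sample i."""
    weights = []
    transmittance = 1.0
    for alpha in alphas:
        weights.append(transmittance * alpha)
        transmittance *= 1.0 - alpha
    return weights

def sample_ray(origin, direction, near, far, num_samples):
    """Evenly spaced sample depths (bin midpoints) and their 3D positions."""
    step = (far - near) / num_samples
    depths = [near + (i + 0.5) * step for i in range(num_samples)]
    points = [tuple(o + t * d for o, d in zip(origin, direction)) for t in depths]
    return depths, points

def weights_along_ray(origin, direction, half_thickness=0.01):
    """Depths and compositing weights for one ray through the stand-in scene."""
    depths, points = sample_ray(origin, direction, near=0.0, far=6.0, num_samples=600)
    alphas = [alpha_from_occupancy(unsigned_distance_to_sphere(p), half_thickness)
              for p in points]
    return depths, render_weights(alphas)

# A ray that hits the sphere: all weight lands on the first sample in the band.
depths, weights = weights_along_ray(origin=(0.0, 0.0, -3.0), direction=(0.0, 0.0, 1.0))
hit_depth = depths[weights.index(1.0)]

# A ray that misses the sphere: every weight stays zero.
_, miss_weights = weights_along_ray(origin=(2.0, 0.0, -3.0), direction=(0.0, 0.0, 1.0))
```

With a binary alpha, the first sample inside the surface band receives the full weight and everything behind it is occluded, so no optimization over rendered views is needed to decide where the surface is.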

Mesh2NeRF can be applied to supervision of a single scene radiance field representation from a given mesh. We compare Mesh2NeRF NGP with Instant NGP.

Panels, left to right: Instant NGP, Ours (Mesh2NeRF NGP), Ground Truth.
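The single-scene comparison is reported in PSNR. As a reminder of how the metric behaves, the sketch below uses the standard PSNR definition to show what a gain in dB means in terms of mean squared error. The helper names and the example MSE value are illustrative assumptions, not values from the paper.

```python
import math

def psnr(mse, max_value=1.0):
    """Peak signal-to-noise ratio in dB for a given mean squared error."""
    return 10.0 * math.log10(max_value ** 2 / mse)

def mse_ratio_for_gain(gain_db):
    """Factor by which MSE shrinks for a PSNR gain of `gain_db` decibels."""
    return 10.0 ** (gain_db / 10.0)

# Hypothetical baseline error, chosen only for illustration.
baseline_mse = 0.01
ratio = mse_ratio_for_gain(3.12)
improved_mse = baseline_mse / ratio
gain = psnr(improved_mse) - psnr(baseline_mse)
```

Under this definition, a 3.12 dB gain corresponds to roughly halving the mean squared error, whatever the baseline.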

Mesh2NeRF can also be applied to supervise NeRF generation tasks. We compare Mesh2NeRF supervision against SSDNeRF, which uses traditional NeRF supervision, on conditional NeRF generation tasks.

@article{chen2024mesh2nerf,
  title={Mesh2NeRF: Direct Mesh Supervision for Neural Radiance Field Representation and Generation},
  author={Chen, Yujin and Nie, Yinyu and Ummenhofer, Benjamin and Birkl, Reiner and Paulitsch, Michael and M{\"u}ller, Matthias and Nie{\ss}ner, Matthias},
  journal={arXiv preprint arXiv:2403.19319},
  year={2024}
}